KL Divergence

What is KL Divergence?

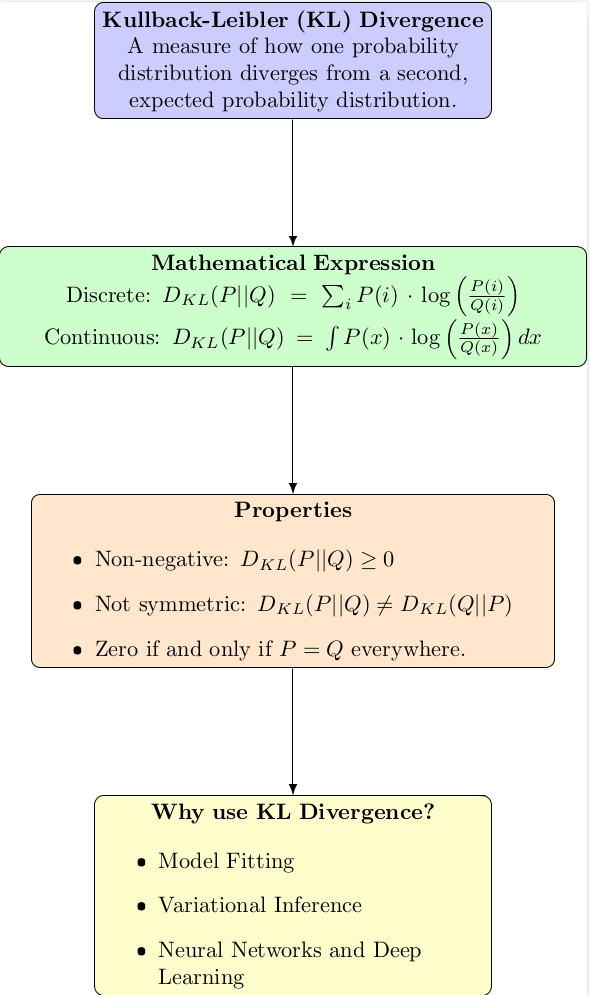

The Kullback-Leibler (KL) Divergence is a measure of how one probability distribution diverges from a second, expected probability distribution. It's a non-symmetric measure of the difference between two probability distributions $P$ and $Q$.

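Mathematical Expression:

The KL Divergence of two discrete probability distributions $P$ and $Q$ is defined as:

$$
D_{KL}(P \| Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}
$$

For continuous distributions with densities $p$ and $q$, the summation becomes an integral:

$$
D_{KL}(P \| Q) = \int p(x) \log \frac{p(x)}{q(x)} \, dx
$$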
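The discrete case can be sketched in a few lines of Python. This is a minimal illustration, not a library implementation: the function name `kl_divergence` and the example distributions are made up for this sketch, and it assumes both inputs are lists of probabilities over the same outcomes, using the natural logarithm (so the result is in nats).

```python
import math

def kl_divergence(p, q):
    """Discrete KL divergence D_KL(P || Q) = sum_x P(x) * log(P(x) / Q(x)), in nats.

    Terms with P(x) == 0 contribute 0 by convention. If Q(x) == 0 while
    P(x) > 0, the divergence is infinite.
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0.0:
            continue
        if qi == 0.0:
            return math.inf
        total += pi * math.log(pi / qi)
    return total

# Hypothetical example: a fair coin P measured against a biased coin Q.
p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, q))  # roughly 0.51 nats
```

Swapping `math.log` for `math.log2` would give the same quantity in bits.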

Properties:

- Non-negative: $D_{KL}(P \| Q) \geq 0$

- Not symmetric: $D_{KL}(P \| Q) \neq D_{KL}(Q \| P)$ in general

- Zero if and only if: $P(x) = Q(x)$ everywhere.
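These three properties are easy to check numerically. The sketch below uses two made-up distributions and a hypothetical helper `kl`; it assumes the second argument is strictly positive wherever the first is.

```python
import math

def kl(p, q):
    """Discrete D_KL(P || Q) in nats; assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Two illustrative distributions over three outcomes.
p = [0.7, 0.2, 0.1]
q = [0.4, 0.4, 0.2]

forward = kl(p, q)   # D_KL(P || Q)
reverse = kl(q, p)   # D_KL(Q || P)
same = kl(p, p)      # D_KL(P || P)

print("non-negative:", forward >= 0 and reverse >= 0)
print("asymmetric:  ", forward != reverse)
print("zero at P=Q: ", same == 0.0)
```

The asymmetry is why the order of the arguments matters in every application: measuring $P$ against $Q$ is not the same as measuring $Q$ against $P$.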

Why use KL Divergence?

- Model Fitting: KL divergence is often used in statistics to estimate probability distributions. When you fit a model to data, you're essentially trying to make the model's predicted distribution close to the empirical distribution of the data. Minimizing the KL divergence is one way to achieve this.

- Variational Inference: In Bayesian statistics, exact posterior inference is often computationally intractable. Variational inference is an approach where one approximates the true posterior with a simpler distribution by minimizing the KL divergence between them.

- Neural Networks and Deep Learning: In the training of certain neural network models, particularly Variational Autoencoders (VAEs), the KL divergence is used as a loss term to ensure that the learned latent variable distribution is close to a prior distribution (typically a standard Gaussian).

KL Divergence has various applications in different domains.

1. Model Fitting:

Why: To estimate probability distributions. When you fit a model to data, you're trying to make the model's predicted distribution close to the empirical distribution of the data. Minimizing the KL divergence is one way to achieve this.

Example: Suppose you have observed data, and you believe it follows a Gaussian distribution. To fit a Gaussian to this data, you adjust its mean and variance so that the KL divergence from the data's empirical distribution to the Gaussian is minimized. Because the entropy of the data does not depend on the parameters, this is equivalent to maximizing the likelihood, so it gives you the maximum-likelihood mean and variance for the Gaussian.
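A rough sketch of this idea: the part of the KL divergence that depends on the Gaussian's parameters is the average negative log-likelihood of the data, so minimizing one minimizes the other. The data values and the function name `avg_neg_log_likelihood` below are invented for illustration.

```python
import math

def avg_neg_log_likelihood(data, mean, var):
    """Average Gaussian negative log-likelihood of the data, in nats.

    Up to a constant that does not depend on (mean, var), this is the
    KL divergence from the empirical distribution to the Gaussian.
    """
    return sum(
        0.5 * math.log(2 * math.pi * var) + (x - mean) ** 2 / (2 * var)
        for x in data
    ) / len(data)

data = [2.1, 1.9, 2.4, 2.0, 1.6, 2.3, 1.8, 2.2]  # made-up observations

# Closed-form minimizer: sample mean and (biased) sample variance.
mle_mean = sum(data) / len(data)
mle_var = sum((x - mle_mean) ** 2 for x in data) / len(data)
best = avg_neg_log_likelihood(data, mle_mean, mle_var)

# Any other parameter choice gives a strictly larger objective.
perturbed = [
    avg_neg_log_likelihood(data, mle_mean + d_mean, mle_var * scale)
    for d_mean, scale in [(0.1, 1.0), (-0.1, 1.0), (0.0, 1.5), (0.0, 0.7)]
]
print("objective at fitted parameters:", best)
print("all perturbations are worse:   ", all(v > best for v in perturbed))
```

For a Gaussian the minimizer has a closed form, so no optimizer is needed here; for models without one, the same objective would typically be minimized numerically.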

2. Variational Inference:

Why: Exact posterior inference in Bayesian statistics can be computationally intractable. Variational inference is an approach where one approximates the true posterior with a simpler distribution. The goal is to minimize the KL divergence between the approximate and true posterior, ensuring the approximation is as close as possible to the real thing.

Example: Imagine you have a Bayesian model where the posterior distribution is complex and doesn't have a closed-form expression. Instead of sampling methods (like MCMC), you decide to use variational inference. You choose a simpler family of distributions (like Gaussians) and adjust their parameters to minimize the KL divergence between this simpler distribution and the true (but unknown) posterior.
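One way to see why this is tractable even though the true posterior is unknown is the standard decomposition of the log evidence. The notation here is an assumption added for illustration: $x$ is the observed data, $z$ the latent variables, $q(z)$ the approximating distribution, and $p(z \mid x)$ the true posterior.

$$
\log p(x) = \underbrace{\mathbb{E}_{q(z)}\big[\log p(x, z) - \log q(z)\big]}_{\text{ELBO}(q)} + D_{KL}\big(q(z) \,\|\, p(z \mid x)\big)
$$

Since $\log p(x)$ does not depend on $q$, maximizing the evidence lower bound (ELBO) is the same as minimizing the KL divergence to the posterior, and the ELBO only requires the joint $p(x, z)$, which the model does provide.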

3. Neural Networks and Deep Learning:

Why: KL divergence is used as a regularization term in some neural network architectures, particularly Variational Autoencoders (VAEs). The KL divergence ensures that the learned latent variable distribution is close to a prior distribution.

Example: In a VAE, the encoder network outputs parameters of a distribution over latent variables. Ideally, this distribution should be close to a standard Gaussian for regularization purposes. The KL divergence between the encoder's output distribution and a standard Gaussian is used as a loss term to ensure this. During training, the VAE minimizes this divergence alongside its reconstruction loss, which encourages the latent space to have good properties (like continuity and coverage of the data manifold).
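When the encoder outputs a diagonal Gaussian, this KL term has a well-known closed form. The sketch below is framework-free and uses a hypothetical function name; it assumes the encoder produces a mean `mu` and a log-variance `log_var` per latent dimension, which is a common but not universal parameterization.

```python
import math

def gaussian_kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over dimensions.

    Closed form: 0.5 * sum(mu^2 + sigma^2 - 1 - log(sigma^2)).
    """
    return 0.5 * sum(
        m * m + math.exp(lv) - 1.0 - lv
        for m, lv in zip(mu, log_var)
    )

# The encoder output already matches the prior: no penalty.
print(gaussian_kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0

# The encoder output drifts away from the prior: positive penalty.
print(gaussian_kl_to_standard_normal([1.0, -0.5], [0.2, -0.3]))
```

In a real training loop the same expression would be written with tensor operations and averaged over the batch.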

4. Information Retrieval:

Why: In search engines or recommendation systems, KL divergence can be used to measure the relevance of documents or items to a query or user profile.

Example: Imagine a search engine where users enter queries. Each query and document can be represented as a probability distribution over words. The search engine can rank documents for a given query by computing the KL divergence between the query's distribution and each document's distribution, prioritizing documents with lower divergence values.
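A toy version of this ranking might look as follows. Everything here is invented for illustration: the documents, the query, the helper names, and the choice of additive smoothing with `alpha=0.1`. Smoothing is needed because a document that lacks a query word would otherwise have probability zero for it and an infinite divergence.

```python
import math
from collections import Counter

def tokenize(text):
    return text.lower().split()

def word_distribution(text, vocab, alpha=0.1):
    """Unigram distribution over vocab with additive smoothing."""
    counts = Counter(tokenize(text))
    total = sum(counts[w] for w in vocab) + alpha * len(vocab)
    return [(counts[w] + alpha) / total for w in vocab]

def kl(p, q):
    """Discrete D_KL(P || Q) in nats; q is strictly positive after smoothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

docs = {
    "doc_a": "kl divergence measures difference between probability distributions",
    "doc_b": "huffman coding builds prefix codes for compression",
    "doc_c": "cooking pasta requires boiling water and salt",
}
query = "kl divergence probability"

# Shared vocabulary across the query and all documents.
vocab = sorted(set(tokenize(query)).union(*(tokenize(t) for t in docs.values())))

q_dist = word_distribution(query, vocab)
scores = {
    name: kl(q_dist, word_distribution(text, vocab))
    for name, text in docs.items()
}

# Lower divergence means the document's word usage is closer to the query's.
ranking = sorted(scores, key=scores.get)
print(ranking[0])  # doc_a
```

The divergence is computed from the query to each document, so documents are penalized mainly for assigning low probability to the query's words.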

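5. Compression and Coding:

Why: KL divergence measures the inefficiency of designing a code (like in Huffman coding) for an assumed probability distribution when the symbols actually follow a different, true distribution. With base-2 logarithms, it is the expected number of extra bits per symbol paid for the mismatch.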

Example: Suppose you are designing a communication system and you have two choices of codes based on two different probability distributions. By calculating the KL divergence between each of these distributions and the true distribution of symbols, you can choose the one that results in a more efficient code.
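The comparison can be sketched numerically. This is an idealized illustration: it assumes each code assigns a symbol of probability `q` a length of exactly `-log2(q)` bits (real Huffman codes use whole-number lengths), and the distributions and function names are made up.

```python
import math

def entropy_bits(p):
    """Shannon entropy of p in bits: the best achievable average code length."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def expected_code_length_bits(p, q):
    """Average bits per symbol when symbols follow p but the code is built for q."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_bits(p, q):
    """D_KL(P || Q) in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

true_dist = [0.5, 0.25, 0.125, 0.125]   # actual symbol frequencies
code_a = [0.25, 0.25, 0.25, 0.25]       # code designed for a uniform source
code_b = [0.4, 0.3, 0.2, 0.1]           # code designed for a skewed guess

for name, q in [("code_a", code_a), ("code_b", code_b)]:
    length = expected_code_length_bits(true_dist, q)
    overhead = kl_bits(true_dist, q)
    print(name, "bits/symbol:", round(length, 4), "overhead:", round(overhead, 4))

# The code with the smaller divergence from the true distribution wastes fewer bits.
better = min(["code_a", "code_b"], key=lambda n: kl_bits(true_dist, {"code_a": code_a, "code_b": code_b}[n]))
print("more efficient:", better)
```

Here the uniform code costs 2 bits per symbol against an entropy of 1.75 bits, an overhead of 0.25 bits, while the skewed guess is closer to the true distribution and wastes less.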
